The JAIS Family of Models

The JAIS family of models is a comprehensive series of bilingual English-Arabic large language models (LLMs). These models are optimized to excel in Arabic while retaining strong English capabilities. We release two variants of foundation models: models pre-trained from scratch and models adaptively pre-trained from Llama-2. Find all of our models below and learn more on Hugging Face.

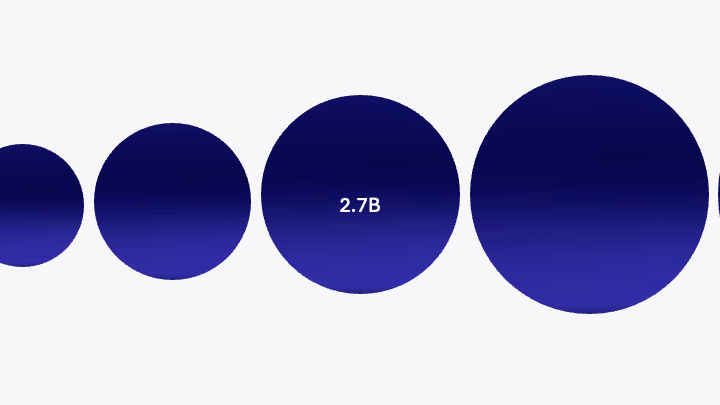

Pre-trained Models

JAIS 590M

590-million-parameter Arabic-centric bilingual model trained from scratch on 480 billion tokens.

JAIS 1.3B

1.3-billion-parameter Arabic-centric bilingual model trained from scratch on 480 billion tokens.

JAIS 2.7B

2.7-billion-parameter Arabic-centric bilingual model trained from scratch on 480 billion tokens.

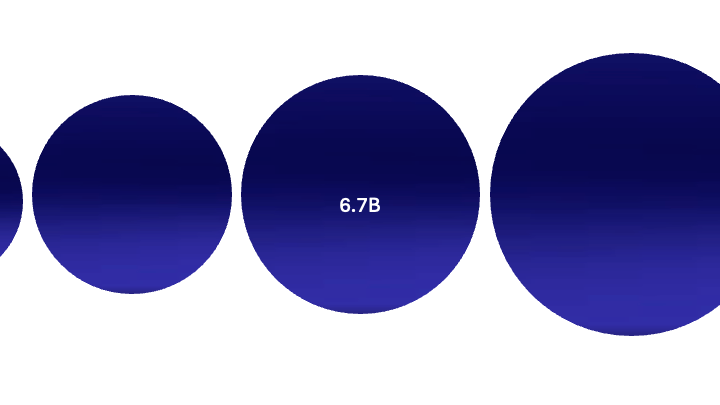

JAIS 6.7B

6.7-billion-parameter Arabic-centric bilingual model trained from scratch on 480 billion tokens.

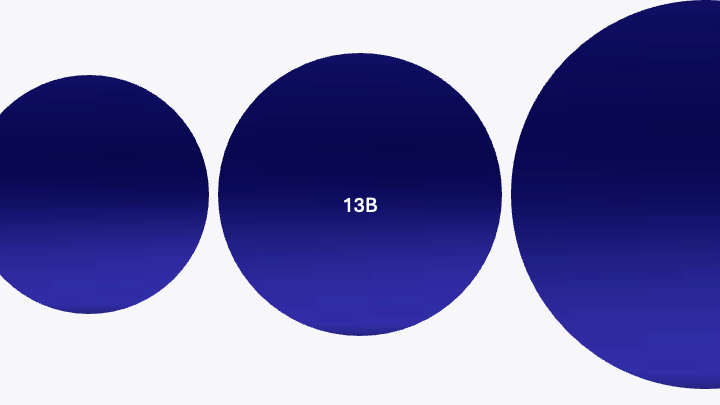

JAIS 13B

13-billion-parameter Arabic-centric bilingual model trained from scratch on 480 billion tokens.

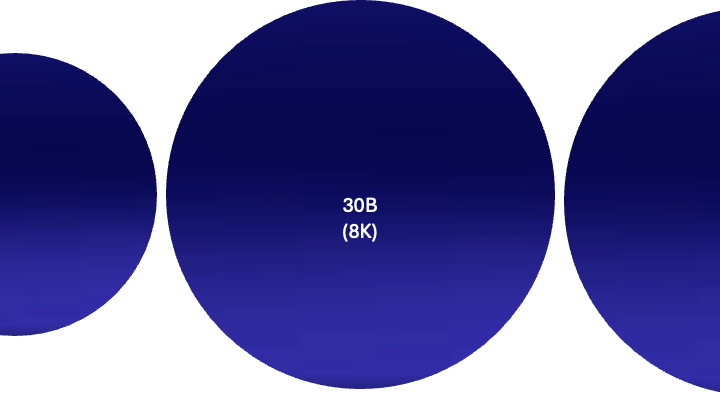

JAIS 30B (8K)

30-billion-parameter Arabic-centric bilingual model trained from scratch on 1,500 billion tokens.

JAIS 30B (16K)

30-billion-parameter Arabic-centric bilingual model trained from scratch on 1,666 billion tokens.

Adapted Models

JAIS 7B

7-billion-parameter Arabic-centric model adaptively pre-trained from Llama-2 on 19 billion Arabic tokens.

JAIS 13B

13-billion-parameter Arabic-centric model adaptively pre-trained from Llama-2 on 140 billion Arabic tokens.

JAIS 70B

70-billion-parameter Arabic-centric model adaptively pre-trained from Llama-2 on 334 billion Arabic tokens.

Frequently Asked Questions

Where can I find information about the JAIS terms of use?

Find information about JAIS terms of use here.